Looking for the Best Machine Learning Algorithms for ML Beginners in 2022? Well, in this blog, we will list the top 10 machine learning algorithms that data scientists need to know. After reading it, you will understand the basic logic behind some of the most popular and ingenious machine learning algorithms, which have also been used by the trading community and can serve as the foundation on which you build the best machine learning algorithm for your own problem.

The use and adoption of machine learning have grown significantly over the past decade, making this one of the most revolutionary and technologically important eras. The impact of machine learning is so great that it has already begun to shape our daily lives.

We live in a world where many tasks that used to be manual are becoming automated, and machine learning is a major reason why. It has driven the rise of interactive software applications, robots performing surgery, chess computers, self-driving cars, e-learning solutions, and more.

Technology is changing at a remarkable pace. If we look at the advances computers have made in the past few years, one thing is easy to predict: there is a bright future ahead of us.

With that said, data scientists can tackle increasingly complex problems with technological solutions because of specialized machine learning algorithms developed for exactly these problems. And to say the least, the results so far are remarkable.


The three most common types of machine learning algorithms are supervised learning, unsupervised learning, and reinforcement learning. The algorithms in this list cover the first two: most of them are supervised, while K-means clustering and dimensionality reduction are unsupervised.

List of Top 10 Machine Learning Algorithms for ML Beginners

- Linear Regression

- Logistic Regression

- K-Nearest Neighbors (KNN) Algorithm

- Support Vector Machine (SVM) Algorithm

- Naïve Bayes Algorithm

- Decision Tree

- K-Means Clustering Algorithm

- Random Forest

- Recurrent Neural Network (RNN)

- Dimensionality Reduction Algorithms

1. Linear Regression

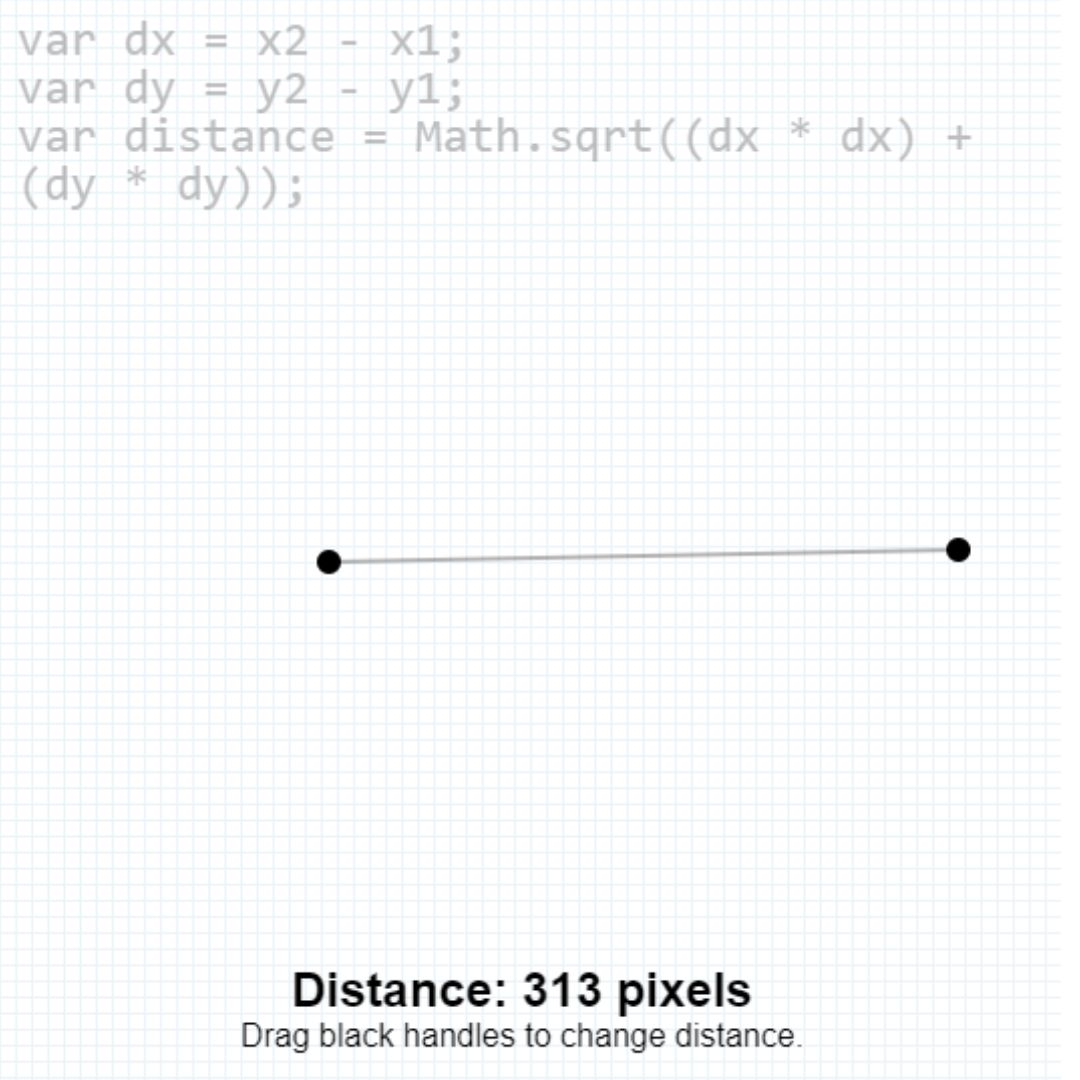

Linear regression is one of the simplest and most popular machine learning algorithms. It is a statistical method used for predictive analysis. Linear regression makes predictions for continuous/real or numerical variables like sales, wages, age, product prices, etc.

The linear regression algorithm models a linear relationship between a dependent variable (y) and one or more independent variables (x), hence the name. Because the relationship is linear, the model describes how the value of the dependent variable changes as the value of the independent variable changes.

The linear regression model fits a straight line (a slope) showing the relationship between the variables, as illustrated in the image above.

Mathematically, we can represent the linear regression as follows:

$$y = a_0 + a_1 x + \varepsilon$$

Here,

- $y$ = dependent variable (target variable)

- $x$ = independent variable (predictor)

- $a_0$ = intercept of the line (gives an additional degree of freedom)

- $a_1$ = linear regression coefficient (scale factor for each input value)

- $\varepsilon$ = random error

The x and y values of the training data set are used to fit the linear regression model, that is, to estimate the coefficients a0 and a1.
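As a minimal sketch, assuming a single predictor, the coefficients a0 (intercept) and a1 (slope) can be estimated with ordinary least squares, which has a closed-form solution. The function names and toy data below are illustrative, not taken from any particular library.

```python
def fit_simple_linear_regression(xs, ys):
    """Estimate a0 (intercept) and a1 (slope) by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # a1 = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    a1 = cov_xy / var_x
    # The fitted line passes through the point (mean_x, mean_y)
    a0 = mean_y - a1 * mean_x
    return a0, a1


def predict(a0, a1, x):
    """Evaluate the fitted line y = a0 + a1 * x."""
    return a0 + a1 * x


# Hypothetical training data lying exactly on y = 1 + 2x
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
a0, a1 = fit_simple_linear_regression(xs, ys)
print(a0, a1)                # → 1.0 2.0
print(predict(a0, a1, 5.0))  # → 11.0
```

With real, noisy data the points will not lie exactly on the line; the random error term ε absorbs the difference.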

2. Logistic Regression

Linear regression predicts continuous values (e.g., rainfall in cm), whereas logistic regression predicts discrete values (e.g., whether a student passes or fails) after applying a transformation function.

Logistic regression is best suited for binary classification, that is, data sets where y = 0 or 1, with 1 representing the default class. For example, when predicting whether an event will happen, there are only two possibilities: it happens (which we denote by 1) or it doesn't (0). So if we are predicting whether a patient will get sick, we label the sick patients with the value 1 in our data set.

Logistic regression is named after the transformation function it uses, the logistic function $h(x) = \frac{1}{1 + e^{-x}}$, which forms an S-shaped curve.

In logistic regression, the output is the probability of the default class. Since it is a probability, it lies between 0 and 1. For example, if we are predicting whether a patient will get sick, sick patients are labeled 1, so if our algorithm assigns a score of 0.98 to a patient, the model considers it very likely that this patient is sick.

This output (the y value) is produced by passing the x value through the logistic function $h(x) = \frac{1}{1 + e^{-x}}$. A threshold (commonly 0.5) is then applied to turn this probability into a binary classification.

The logistic regression equation

$$P(x) = \frac{e^{b_0 + b_1 x}}{1 + e^{b_0 + b_1 x}}$$

can be rearranged into the log-odds form

$$\ln\left(\frac{P(x)}{1 - P(x)}\right) = b_0 + b_1 x$$

The objective of logistic regression is to use the training data to find the values of the coefficients b0 and b1 that make the predicted probabilities match the actual outcomes as closely as possible. These coefficients are estimated using the maximum likelihood estimation technique.
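Below is a minimal sketch, assuming a single input variable x, that estimates b0 and b1 by gradient ascent on the log-likelihood (one simple way to carry out maximum likelihood estimation; libraries typically use more sophisticated solvers) and then applies a 0.5 threshold. The learning rate, epoch count, function names, and the hours-studied data are hypothetical choices for illustration.

```python
import math


def sigmoid(z):
    """Logistic function h(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_regression(xs, ys, lr=0.1, epochs=5000):
    """Estimate b0 and b1 by gradient ascent on the average log-likelihood."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            # (actual label - predicted probability) drives both gradients
            error = y - sigmoid(b0 + b1 * x)
            g0 += error
            g1 += error * x
        b0 += lr * g0 / n
        b1 += lr * g1 / n
    return b0, b1


def predict_class(b0, b1, x, threshold=0.5):
    """Apply a threshold to P(x) to get a 0/1 label."""
    return 1 if sigmoid(b0 + b1 * x) >= threshold else 0


# Hypothetical data: hours studied (x) vs. passed the exam (1) or not (0)
hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0]
passed = [0, 0, 0, 1, 0, 1, 1, 1]
b0, b1 = fit_logistic_regression(hours, passed)
print(b0, b1)
print(predict_class(b0, b1, 1.0), predict_class(b0, b1, 4.5))
```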

3. KNN Algorithm

This algorithm can be applied to both classification and regression problems, although in data science it is more widely used for classification. It is a simple algorithm that stores all available cases and classifies each new case by a majority vote of its k nearest neighbors: the new case is assigned to the class most common among those neighbors. A distance function (for example, Euclidean distance) determines which neighbors are nearest.

KNN is easy to understand through a real-life comparison: if you want information about a person, talk to their friends and colleagues!

Things to consider before choosing KNN algorithm:

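- KNN is computationally expensive, since classifying a new case requires computing its distance to every stored case.

- Variables need to be normalized; otherwise, variables with larger ranges can dominate the distance and skew the algorithm.

- The data needs to be preprocessed first.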
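A minimal sketch of KNN classification with Euclidean distance and a majority vote, written in plain Python. It assumes the features are already on comparable scales; the function names and toy data are hypothetical.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Straight-line distance between two feature vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def knn_classify(train_points, train_labels, query, k=3):
    """Classify `query` by a majority vote of its k nearest stored cases."""
    # Every prediction scans all stored cases, which is why KNN gets expensive
    neighbors = sorted(
        zip(train_points, train_labels),
        key=lambda pair: euclidean(pair[0], query),
    )[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical 2-D data whose two features share the same scale
train_points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
train_labels = ["A", "A", "A", "B", "B", "B"]
print(knn_classify(train_points, train_labels, (1.8, 1.8)))  # → A
print(knn_classify(train_points, train_labels, (6.2, 6.4)))  # → B
```

Note that there is no training step at all: the "model" is simply the stored cases, and all the work happens at prediction time.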

4. SVM Algorithm

SVM is a supervised learning algorithm used mainly for classification. First, a set of training examples, each of which belongs to one category or another, is fed into the SVM algorithm. The algorithm then builds a model that assigns new data to one of the categories it learned during the training phase.

The SVM algorithm finds a hyperplane that separates the categories, choosing the one with the widest possible margin between them. When the algorithm processes a new data point, it places the point in one of the classes depending on which side of the hyperplane it falls.

As far as trading is concerned, an SVM algorithm can be built that classifies securities data into buy, sell, or neutral classes and then classifies test data according to the learned rules.
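The idea of a separating hyperplane can be sketched with a linear SVM trained by stochastic subgradient descent on the regularized hinge loss. This is a swapped-in, simplified training method (practical libraries usually solve the SVM optimization problem with dedicated solvers and also support kernels), and it handles only two classes labeled +1 and -1. The hyperparameters `lam`, `lr`, and `epochs`, the function names, and the toy data are assumptions for illustration.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.01, epochs=1000):
    """Learn a hyperplane w.x + b = 0 by stochastic subgradient descent on the
    regularized hinge loss lam/2 * ||w||^2 + max(0, 1 - y * (w.x + b)).
    Labels must be +1 or -1."""
    w = [0.0] * len(points[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score < 1:
                # Inside the margin or misclassified: move the hyperplane so that
                # x is classified correctly with a margin of at least 1
                w = [wi - lr * (lam * wi - y * xi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Correct with enough margin: only the regularizer shrinks w
                w = [wi - lr * lam * wi for wi in w]
    return w, b


def svm_predict(w, b, x):
    """Assign x to a class depending on which side of the hyperplane it falls."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1


# Hypothetical, linearly separable 2-D data
points = [(3.0, 2.5), (2.5, 3.5), (4.0, 3.0), (-3.0, -2.5), (-2.5, -3.5), (-4.0, -3.0)]
labels = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(points, labels)
print(svm_predict(w, b, (3.0, 3.0)), svm_predict(w, b, (-3.0, -3.0)))
```

A three-class problem such as buy/sell/neutral is commonly handled by combining several binary SVMs (for example, one-vs-rest).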

5. Naive Bayes Algorithm

The Naive Bayes classifier assumes that, given the class, the presence of a particular feature is unrelated to the presence of any other feature.

Even if these features are in fact related, the Naive Bayes classifier treats each of them independently when calculating the probability of a particular outcome.

Naive Bayes models are easy to build and useful for large data sets. Despite their simplicity, they are known to sometimes outperform much more sophisticated classification methods.
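A minimal sketch of a categorical Naive Bayes classifier, assuming discrete feature values. It adds (in log space) one likelihood term per feature to the class prior, which is exactly where the independence assumption enters, and uses add-one (Laplace) smoothing so an unseen value does not zero out a class. The weather-style data and all names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(rows, labels):
    """Count class frequencies and, per class, how often each feature value occurs."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (feature index, class) -> Counter of values
    feature_values = defaultdict(set)    # feature index -> set of observed values
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(i, label)][value] += 1
            feature_values[i].add(value)
    return class_counts, value_counts, feature_values


def predict_naive_bayes(model, row):
    """Pick the class maximizing log P(class) + sum_i log P(feature_i | class)."""
    class_counts, value_counts, feature_values = model
    total = sum(class_counts.values())
    best_class, best_score = None, -math.inf
    for cls, count in class_counts.items():
        score = math.log(count / total)
        for i, value in enumerate(row):
            # Each feature contributes independently given the class (the "naive"
            # assumption); add-one smoothing keeps unseen values from zeroing it out
            likelihood = (value_counts[(i, cls)][value] + 1) / (count + len(feature_values[i]))
            score += math.log(likelihood)
        if score > best_score:
            best_class, best_score = cls, score
    return best_class


# Hypothetical data: (outlook, windy) -> play outside or stay in
rows = [("sunny", False), ("sunny", True), ("rainy", True), ("rainy", True),
        ("overcast", False), ("sunny", False), ("overcast", True), ("rainy", False)]
labels = ["play", "play", "stay", "stay", "play", "play", "play", "stay"]
model = train_naive_bayes(rows, labels)
print(predict_naive_bayes(model, ("sunny", False)))  # → play
print(predict_naive_bayes(model, ("rainy", True)))   # → stay
```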

6. Decision Tree

Decision trees are one of the most commonly used machine learning algorithms. A decision tree is a supervised learning algorithm that works well for classification problems and can handle both continuous and categorical variables.

Decision trees are often used in statistics and data analysis for predictive modeling. The model is structured as branches and leaves: each internal node tests an attribute, each branch corresponds to an outcome of that test, and each leaf stores a value of the target variable.

To classify a new case, you start at the root (the top of the tree) and follow the branches down to a leaf, which gives the predicted value. The goal is to create a model that predicts the value of the target variable from multiple input variables.
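A compact sketch of how such a tree can be grown and used, assuming numeric features and Gini impurity as the splitting criterion (as in CART-style trees). `build_tree`, `classify`, the `max_depth` limit, and the toy age/income data are illustrative assumptions, not a reference implementation; `classify` performs the root-to-leaf walk described above.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Return (impurity, feature, threshold) for the lowest weighted Gini split."""
    best = None
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [lab for r, lab in zip(rows, labels) if r[f] <= t]
            right = [lab for r, lab in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Internal nodes test one attribute; leaves store the majority class."""
    majority = Counter(labels).most_common(1)[0][0]
    if depth == max_depth or len(set(labels)) == 1:
        return majority
    split = best_split(rows, labels)
    if split is None:
        return majority
    _, f, t = split
    left_idx = [i for i, r in enumerate(rows) if r[f] <= t]
    right_idx = [i for i, r in enumerate(rows) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([rows[i] for i in left_idx], [labels[i] for i in left_idx], depth + 1, max_depth),
        "right": build_tree([rows[i] for i in right_idx], [labels[i] for i in right_idx], depth + 1, max_depth),
    }


def classify(tree, row):
    """Start at the root and follow the branches down to a leaf."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


# Hypothetical data: (age, income in thousands) -> bought the product?
rows = [(25, 30), (35, 60), (45, 80), (20, 20), (50, 90), (30, 40)]
labels = ["no", "yes", "yes", "no", "yes", "no"]
tree = build_tree(rows, labels)
print(tree)                      # → {'feature': 0, 'threshold': 30, 'left': 'no', 'right': 'yes'}
print(classify(tree, (40, 70)))  # → yes
```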

7. K-Means Clustering Algorithm

It is an unsupervised learning algorithm for solving clustering problems. The data set is partitioned into a chosen number of clusters (let's call this number K) so that the data points within a cluster are homogeneous, while being heterogeneous compared to the data in other clusters.

How K-means forms clusters:

- The K-means algorithm chooses K points, called centroids, one for each cluster.

- Each data point is assigned to its nearest centroid, forming K clusters.

- It then computes new centroids from the current cluster members (the mean of each cluster).

- Each data point is reassigned to the closest of the new centroids. This process is repeated until the centroids no longer change.
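The steps above map directly onto a short loop. This is a minimal sketch of the standard K-means procedure (Lloyd's algorithm), assuming the initial centroids are picked at random from the data points; the seed, iteration cap, function name, and sample points are arbitrary choices for illustration. It uses `math.dist`, available in Python 3.8+.

```python
import math
import random


def kmeans(points, k, max_iters=100, seed=0):
    """Cluster points into k groups; returns (centroids, assignments)."""
    rng = random.Random(seed)
    # Step 1: pick k distinct data points as the initial centroids
    centroids = rng.sample(points, k)
    for _ in range(max_iters):
        # Step 2: assign every point to its nearest centroid
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Step 3: recompute each centroid as the mean of its members
        new_centroids = []
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        # Step 4: stop once the centroids no longer change
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, assignments


# Hypothetical 2-D data with two obvious groups
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.5)]
centroids, assignments = kmeans(points, k=2)
print(sorted(centroids))  # → [(1.5, 1.5), (8.5, 8.5)]
```

Because the result can depend on the initial centroids, K-means is often run several times with different seeds.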

8. Random Forest

A random forest is an ensemble of decision trees. To classify a new object based on its attributes, each tree gives a classification, which counts as a vote for that class. The forest then chooses the classification with the most votes across all the trees in the forest. Each tree is planted and grown as follows:

- If the number of cases in the training set is N, a sample of N cases is drawn at random, with replacement. This sample is used as the training set for growing the tree.

- If there are M input variables, a number m < M is specified such that at each node, m variables are selected at random out of the M, and the best split on these m variables is used to split the node. The value of m is held constant while the forest is grown.

- Each tree is grown to the largest extent possible, without pruning.
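Here is a minimal sketch of the growing procedure above, assuming numeric features, Gini impurity for choosing splits, and threshold splits of the form `feature <= t`. It follows the listed steps (bootstrap sample of N cases, m randomly chosen variables per node, no pruning, majority vote), but the number of trees, the value of `m`, the function names, and the toy data are hypothetical, and it omits many refinements found in real libraries.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def grow_tree(rows, labels, m, rng):
    """Grow an unpruned tree; at each node only m randomly chosen features are tried.
    Internal nodes are tuples (feature, threshold, left, right); leaves are labels."""
    if len(set(labels)) == 1:
        return labels[0]
    best = None  # (weighted impurity, feature, threshold)
    for f in rng.sample(range(len(rows[0])), m):
        for t in sorted({r[f] for r in rows}):
            left = [lab for r, lab in zip(rows, labels) if r[f] <= t]
            right = [lab for r, lab in zip(rows, labels) if r[f] > t]
            if left and right:
                score = len(left) * gini(left) + len(right) * gini(right)
                if best is None or score < best[0]:
                    best = (score, f, t)
    if best is None:  # no valid split on the sampled features: make a leaf
        return Counter(labels).most_common(1)[0][0]
    _, f, t = best
    left_idx = [i for i, r in enumerate(rows) if r[f] <= t]
    right_idx = [i for i, r in enumerate(rows) if r[f] > t]
    return (
        f,
        t,
        grow_tree([rows[i] for i in left_idx], [labels[i] for i in left_idx], m, rng),
        grow_tree([rows[i] for i in right_idx], [labels[i] for i in right_idx], m, rng),
    )


def tree_predict(tree, row):
    """Walk from the root down to a leaf."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree


def random_forest(rows, labels, n_trees=25, m=1, seed=0):
    rng = random.Random(seed)
    n = len(rows)
    forest = []
    for _ in range(n_trees):
        # Bootstrap: draw N cases at random, with replacement
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(grow_tree([rows[i] for i in idx], [labels[i] for i in idx], m, rng))
    return forest


def forest_predict(forest, row):
    """Each tree votes; the class with the most votes wins."""
    votes = Counter(tree_predict(tree, row) for tree in forest)
    return votes.most_common(1)[0][0]


# Hypothetical data: two features, two classes
rows = [(1, 2), (2, 1), (2, 3), (3, 2), (7, 8), (8, 7), (8, 9), (9, 8)]
labels = ["red"] * 4 + ["blue"] * 4
forest = random_forest(rows, labels)
print(forest_predict(forest, (2, 2)), forest_predict(forest, (8, 8)))
```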

9. Recurrent Neural Network (RNN)

Did you know that voice assistants such as Siri and Google Assistant have used RNNs? An RNN is a type of neural network with memory: its hidden state is carried from one step to the next, which makes it well suited to sequential data, i.e., data where each element depends on the ones before it.

One way to explain the advantage of an RNN over a regular (feedforward) neural network is to imagine processing a word character by character. If the word is "trading", a regular neural network has forgotten the "t" by the time it reaches the "d", while a recurrent neural network still remembers it, because it has its own memory.
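To illustrate the memory idea, here is a minimal sketch of a vanilla RNN forward pass, assuming one-hot encoded characters, untrained random weights, and the common update $h_t = \tanh(W_{xh} x_t + W_{hh} h_{t-1} + b)$. The names, sizes, and seed are hypothetical. Because each hidden state feeds into the next, the final state for "trading" still carries information about the first character, so it differs from the final state for "grading" even though the two words end identically.

```python
import math
import random


def one_hot(char, vocab):
    """Encode a character as a vector with a single 1.0."""
    return [1.0 if c == char else 0.0 for c in vocab]


def rnn_final_state(word, vocab, hidden_size=4, seed=0):
    """Feed `word` through a vanilla RNN one character at a time and return
    the last hidden state, using h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    rng = random.Random(seed)
    # Untrained random weights (same seed -> same weights on every call)
    W_xh = [[rng.uniform(-0.5, 0.5) for _ in vocab] for _ in range(hidden_size)]
    W_hh = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
    b = [0.0] * hidden_size
    h = [0.0] * hidden_size  # the memory starts empty
    for char in word:
        x = one_hot(char, vocab)
        # The new state depends on the current input AND the previous state
        h = [
            math.tanh(
                sum(W_xh[i][j] * x[j] for j in range(len(vocab)))
                + sum(W_hh[i][j] * h[j] for j in range(hidden_size))
                + b[i]
            )
            for i in range(hidden_size)
        ]
    return h


vocab = sorted(set("tradinggrading"))
h1 = rnn_final_state("trading", vocab)
h2 = rnn_final_state("grading", vocab)
# Same weights and same last six characters, but the first character still matters
print(h1 != h2)
```

In a real RNN, the weights would be learned from data (for example, with backpropagation through time) rather than left random.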

10. Dimensionality Reduction Algorithms

In machine learning classification problems, there are often too many factors on which the final classification is based. These factors are variables called features. The higher the number of features, the harder it is to visualize the training set and then work with it. Sometimes many of these features are correlated and therefore redundant. This is where dimensionality reduction algorithms come into play. Dimensionality reduction is the process of reducing the number of random variables under consideration by obtaining a set of key variables.

There are two components of dimensionality reduction:

- Feature selection: we try to find a subset of the original set of variables, or features, that is smaller but can still be used to model the problem. This usually involves one of three approaches: filter, wrapper, and embedded methods.

- Feature extraction: this transforms the data from a high-dimensional space into a space with fewer dimensions. Principal component analysis (PCA) is a common example.
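As an example of feature extraction, here is a minimal sketch of principal component analysis (PCA) that finds the first principal direction with power iteration and projects 2-D points onto it, reducing them to 1-D. It assumes the covariance matrix has a single dominant eigenvalue and that the all-ones starting vector is not orthogonal to the top eigenvector; the function name and data are hypothetical.

```python
import math


def pca_first_component(points, iters=200):
    """Return (mean, unit principal direction, 1-D projections) for n-D points."""
    n, d = len(points), len(points[0])
    mean = [sum(p[j] for p in points) / n for j in range(d)]
    centered = [[p[j] - mean[j] for j in range(d)] for p in points]
    # Sample covariance matrix (d x d)
    cov = [[sum(row[a] * row[c] for row in centered) / (n - 1) for c in range(d)]
           for a in range(d)]
    # Power iteration: repeated multiplication by cov converges to the top eigenvector
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(cov[a][c] * v[c] for c in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Project each centered point onto that direction: d dimensions -> 1
    projections = [sum(row[j] * v[j] for j in range(d)) for row in centered]
    return mean, v, projections


# Hypothetical, strongly correlated 2-D data (y is roughly 2x)
points = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]
mean, v, projections = pca_first_component(points)
print(v)            # close to (1, 2) / sqrt(5), since y is about 2x
print(projections)  # one number per point instead of two
```

Because the two features are almost perfectly correlated, a single extracted feature keeps nearly all of the variation in the data.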

That’s a wrap!

Thank you for taking the time to read this article! I hope you found it informative and enjoyable. If you did, please consider sharing it with your friends and followers. Your support helps me continue creating content like this.



Thanks!

Faraz 😊