Apache Spark is a fast, open-source framework for processing large amounts of data in parallel across a distributed, fault-tolerant cluster of machines. This article is a quick guide for the absolute beginner!

Apache Spark is a fast, general-purpose data processing engine. It can be used for batch processing, SQL queries, machine learning, and streaming analytics.

In this article, we will give you an overview of Apache Spark and explain how it can be used for data analysis. Let's start your journey with Apache Spark!

What is Apache Spark?

Apache Spark is an open-source big data processing engine. It started in 2009 as a research project at UC Berkeley's AMPLab and was donated to the Apache Software Foundation in 2013. Spark enables data scientists and engineers to process large amounts of data quickly and effectively. The project describes Spark as "a unified analytics engine for large-scale data processing."

Spark can be used for a variety of tasks, such as machine learning, SQL analytics, and graph processing. It also works alongside other big data tools such as Hadoop and Hive: for example, it can read files from HDFS, query Hive tables, and run on Hadoop YARN clusters.

Features of Apache Spark

Here are some of the key features of Apache Spark:

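- High performance: Spark keeps intermediate data in memory where possible and plans whole chains of operations before running them, which makes iterative and interactive workloads much faster than disk-based MapReduce jobs.

- Unified engine: one engine powers Spark SQL for structured queries, Structured Streaming for real-time data, MLlib for machine learning, and GraphX for graph processing.

- Easy to use: Spark offers high-level APIs in Scala, Java, Python, and SQL, plus interactive shells (spark-shell and pyspark), so beginners who know one of these languages can get started quickly.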

Types of Data in Apache Spark

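Spark can read many kinds of data, including plain text and JSON (as well as formats such as CSV and Parquet), and represents that data internally with abstractions such as RDDs (resilient distributed datasets) and DataFrames.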

Text Data: Text data is usually a string or text file that contains human-readable information. In Spark, text files can be loaded line by line, either as a DataFrame with spark.read.text() or as an RDD with SparkContext.textFile(). From there you can parse and tokenize the text, count words, or extract features for machine-learning tasks such as sentiment analysis with MLlib.
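
As a rough illustration of text processing, here is the classic word count written in plain Python, so it runs without a Spark installation. The steps mirror the shape of a typical PySpark RDD job: `flatMap` to split lines into words, `map` to pair each word with 1, and `reduceByKey` to add up the counts. The function name `word_count` and the sample lines are hypothetical; in real Spark, each step would run in parallel on the partitions of the input file.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Count words the way a flatMap -> map -> reduceByKey pipeline would."""
    # flatMap step: split every line into lowercase words and flatten the result
    words: List[str] = [w for line in lines for w in line.lower().split()]
    # map step: turn each word into a (word, 1) pair
    pairs: List[Tuple[str, int]] = [(w, 1) for w in words]
    # reduceByKey step: sum the counts for each distinct word
    counts: Dict[str, int] = defaultdict(int)
    for word, one in pairs:
        counts[word] += one
    return dict(counts)


if __name__ == "__main__":
    sample = ["Spark is fast", "Spark is general purpose"]
    print(word_count(sample))
```

Splitting on whitespace keeps the sketch short; real text usually also needs punctuation stripped before counting.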

JSON Data: JSON (JavaScript Object Notation) is a lightweight data format that is commonly used in web applications. Spark loads JSON with spark.read.json(), which by default expects one JSON object per line (the JSON Lines layout) and infers a schema from the data. The result is a DataFrame that you can transform and query with DataFrame operations or SQL.
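
Here is a minimal plain-Python sketch of what happens conceptually when Spark reads JSON Lines: each line is parsed as one JSON object, a schema is inferred from the fields that appear, and the records can then be filtered. The helpers `read_json_lines` and `infer_schema` are hypothetical and far simpler than Spark's real schema inference (which, for example, fills missing fields with null); the final filter is meant to resemble `df.filter(df.age > 30).select("name")` on a DataFrame.

```python
import json
from typing import Any, Dict, List


def read_json_lines(text: str) -> List[Dict[str, Any]]:
    """Parse JSON Lines text: one JSON object per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def infer_schema(records: List[Dict[str, Any]]) -> Dict[str, str]:
    """Rough schema inference: map each field to the Python type name(s) observed."""
    seen: Dict[str, set] = {}
    for record in records:
        for key, value in record.items():
            seen.setdefault(key, set()).add(type(value).__name__)
    # Join conflicting types (e.g. "float|int") so the conflict stays visible
    return {key: "|".join(sorted(types)) for key, types in sorted(seen.items())}


if __name__ == "__main__":
    data = '{"name": "Ana", "age": 34}\n{"name": "Bo", "age": 28}\n{"name": "Cy"}\n'
    people = read_json_lines(data)
    print(infer_schema(people))
    # Similar in spirit to df.filter(df.age > 30).select("name"); a missing age is skipped
    print([p["name"] for p in people if p.get("age") is not None and p["age"] > 30])
```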

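RDDs (Resilient Distributed Datasets): RDDs are Spark's core data abstraction: an immutable collection of records that is split into partitions, spread across the machines of a cluster, and processed in parallel. They are resilient because Spark remembers the lineage of each RDD, that is, the sequence of transformations used to build it from its source data. If a machine fails and a partition is lost, Spark recomputes that partition from its lineage instead of restarting the whole job.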
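
To make lineage concrete, the sketch below models an RDD in plain Python. The `ToyRDD` class is hypothetical and not part of Spark's API: a transformation such as `map` only records how to derive new data from its parent, and if a stored partition is lost, `compute` rebuilds it by replaying those recorded steps from the source data, which is the idea behind Spark's fault tolerance.

```python
from typing import Callable, Dict, List, Optional


class ToyRDD:
    """Toy model of an RDD: partitioned data plus the lineage needed to rebuild it.

    Hypothetical illustration only; this is not Spark's RDD class.
    """

    def __init__(
        self,
        source: Optional[List[List[int]]] = None,
        parent: Optional["ToyRDD"] = None,
        fn: Optional[Callable[[int], int]] = None,
    ) -> None:
        self.source = source      # base data, e.g. blocks of a file (always re-readable)
        self.parent = parent      # the dataset this one was derived from
        self.fn = fn              # element-wise transformation applied to the parent
        self.persisted = False    # whether computed partitions are kept
        self.stored: Dict[int, List[int]] = {}

    def map(self, fn: Callable[[int], int]) -> "ToyRDD":
        # A transformation only records lineage; nothing is computed yet
        return ToyRDD(parent=self, fn=fn)

    def persist(self) -> "ToyRDD":
        self.persisted = True
        return self

    def num_partitions(self) -> int:
        if self.source is not None:
            return len(self.source)
        return self.parent.num_partitions()

    def compute(self, i: int) -> List[int]:
        if i in self.stored:              # partition is still available
            return self.stored[i]
        if self.source is not None:       # base dataset: read the partition
            result = list(self.source[i])
        else:                             # derived dataset: replay lineage from the parent
            result = [self.fn(x) for x in self.parent.compute(i)]
        if self.persisted:
            self.stored[i] = result
        return result

    def collect(self) -> List[int]:
        return [x for i in range(self.num_partitions()) for x in self.compute(i)]


if __name__ == "__main__":
    base = ToyRDD(source=[[1, 2], [3, 4]])
    result = base.map(lambda x: x * x).map(lambda x: x + 1).persist()
    print(result.collect())
    del result.stored[1]      # simulate losing partition 1, e.g. when an executor fails
    print(result.collect())   # partition 1 is rebuilt from lineage
```

After the stored partition is deleted, the second `collect()` recomputes it from the base data and returns the same values as the first call.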

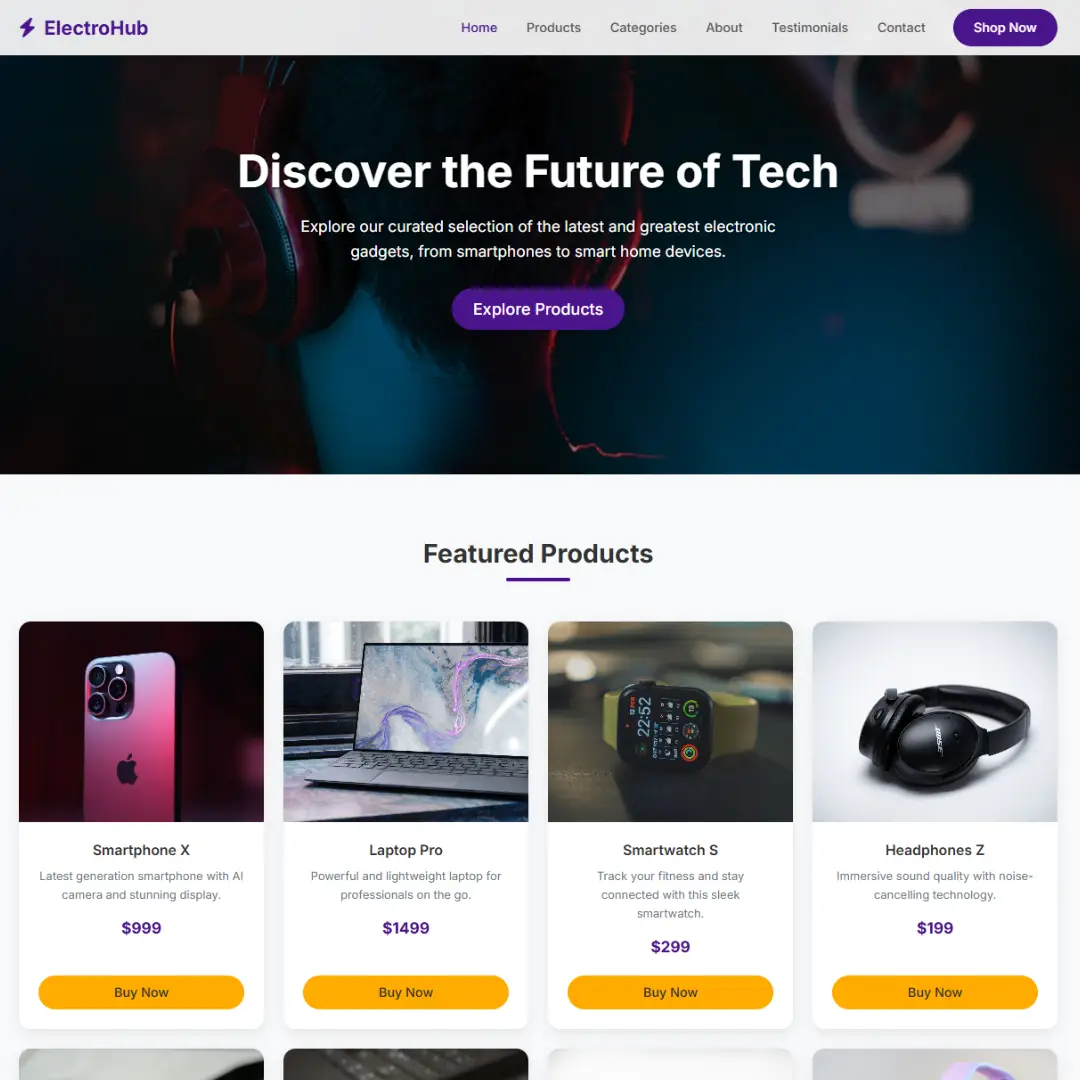

The Pros and Cons of Building an Application with Apache Spark

Apache Spark is a fast and scalable data processing engine that you can use to build big data applications. It can handle large amounts of data quickly and efficiently, making it a great choice for batch analytics and near-real-time stream processing. However, Spark also has some downsides, so it's important to understand both its benefits and its drawbacks before using it in your project.

Here are the pros and cons of using Apache Spark:

Pros:

- Very fast: in-memory processing and an optimizing execution engine let Spark process large amounts of data quickly.

- Developer-friendly: high-level APIs make it straightforward to build big data applications, and the same code can run on a single laptop in local mode or on a large cluster.

- Scalable: Spark scales horizontally, so as your data grows you can add more machines to the cluster instead of rewriting your application.

Cons:

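- Memory-hungry: Spark's speed comes from keeping data in memory, so clusters often need plenty of RAM, which can make them expensive to run.

- Tuning can be complex: getting good performance on large jobs may require adjusting partitioning, memory settings, and shuffle behavior.

- Overhead on small data: for datasets that fit comfortably on one machine, Spark's startup and scheduling overhead can make it slower than a single-machine tool such as pandas.

- No storage layer of its own: Spark is a compute engine, so you still need a storage system such as HDFS, a cloud object store, or a database.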
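
The "Scalable" advantage above comes from partitioning: Spark splits a dataset into partitions, runs the same task on each partition in parallel, and then merges the small per-partition results. The plain-Python sketch below imitates that pattern for computing a mean, with a thread pool standing in for cluster executors. The function names are hypothetical, and the threads illustrate the structure of the computation rather than real speedups.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence, Tuple


def partition(data: Sequence[float], num_partitions: int) -> List[List[float]]:
    """Split data into contiguous, nearly equal chunks, like a dataset's partitions."""
    size, extra = divmod(len(data), num_partitions)
    parts: List[List[float]] = []
    start = 0
    for i in range(num_partitions):
        # The first `extra` partitions get one additional element
        end = start + size + (1 if i < extra else 0)
        parts.append(list(data[start:end]))
        start = end
    return parts


def partial_stats(part: List[float]) -> Tuple[float, int]:
    # Runs independently on each partition: a partial sum and a count
    return sum(part), len(part)


def parallel_mean(data: Sequence[float], num_partitions: int = 4) -> float:
    if not data or num_partitions < 1:
        raise ValueError("need non-empty data and at least one partition")
    parts = partition(data, num_partitions)
    # Threads stand in for executors; Spark would schedule these tasks across a cluster
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        results = list(pool.map(partial_stats, parts))
    # Merge the small per-partition results, as the driver would
    total = sum(s for s, _ in results)
    count = sum(n for _, n in results)
    return total / count


if __name__ == "__main__":
    print(parallel_mean(list(range(1, 11)), num_partitions=4))
```

Sending back only a (sum, count) pair per partition keeps the merge step tiny no matter how large each partition is, which is why this pattern scales as machines are added.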

The Difference between Hadoop and Spark

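Apache Spark is a computing platform that can process large data sets much faster than traditional disk-based systems. The key difference from Hadoop MapReduce is that MapReduce writes intermediate results to disk between processing steps, while Spark keeps them in memory whenever possible and optimizes the whole chain of operations. Spark can also handle both batch and streaming workloads with the same engine. Note that Hadoop is more than MapReduce: it also includes the HDFS file system and the YARN resource manager, and Spark often runs on top of them rather than replacing them.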
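
A simple way to see why keeping data in memory matters: when several actions run on the same dataset without caching, each action re-reads and recomputes the input, much as chained MapReduce jobs re-read their data from disk. In Spark you can call `cache()` (or `persist()`) so the dataset is kept in memory after it is first computed. The hypothetical `Pipeline` class below models this in plain Python; like Spark, its transformations are lazy and nothing is read until an action such as `count()` runs.

```python
from typing import Callable, List, Optional, Tuple

Step = Callable[[int], int]


class Pipeline:
    """Hypothetical model of a lazily evaluated dataset with optional caching."""

    def __init__(self, load: Callable[[], List[int]], steps: Tuple[Step, ...] = ()) -> None:
        self.load = load                  # reads the input, e.g. from disk
        self.steps = steps                # recorded transformations (lazy)
        self.keep_in_memory = False
        self._memory: Optional[List[int]] = None

    def map(self, fn: Step) -> "Pipeline":
        # Transformations return a new pipeline and compute nothing yet
        return Pipeline(self.load, self.steps + (fn,))

    def cache(self) -> "Pipeline":
        # Mark for reuse; the data is stored when the first action runs
        self.keep_in_memory = True
        return self

    def _materialize(self) -> List[int]:
        if self._memory is not None:
            return self._memory
        rows = self.load()                # without caching, every action re-reads the input
        for fn in self.steps:
            rows = [fn(r) for r in rows]
        if self.keep_in_memory:
            self._memory = rows
        return rows

    # Actions trigger the actual computation
    def count(self) -> int:
        return len(self._materialize())

    def total(self) -> int:
        return sum(self._materialize())


if __name__ == "__main__":
    reads: List[int] = []

    def read_source() -> List[int]:
        reads.append(1)                   # record each (expensive) read
        return [1, 2, 3, 4]

    plain = Pipeline(read_source).map(lambda x: x * 10)
    plain.count()
    plain.total()
    print("reads without cache:", len(reads))

    reads.clear()
    cached = Pipeline(read_source).map(lambda x: x * 10).cache()
    cached.count()
    cached.total()
    print("reads with cache:", len(reads))
```

Running `count()` and then `total()` on the uncached pipeline reads the source twice; after `cache()`, the second action reuses the in-memory result and the source is read only once.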

In addition, Spark's built-in libraries make it well suited for data analysis. For example, Spark can be used for SQL queries, text processing, machine learning, and forecasting.

How can Apache Spark be used for data analysis?

There are many ways that Apache Spark can be used for data analysis.

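One way that it can be used is for machine learning. Spark's MLlib library provides distributed implementations of common algorithms, such as linear and logistic regression, decision trees, k-means clustering, and collaborative filtering for recommendations, so you can train models on datasets too large for a single machine.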
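
Machine-learning training is often iterative, which is where Spark's in-memory processing pays off: the data stays cached while each iteration computes partial results on every partition and then sums them. The sketch below shows that pattern with plain-Python batch gradient descent for fitting a line y = w * x + b. It is a hand-written illustration, not how MLlib is implemented, and the function names, learning rate, and iteration count are assumptions chosen for this small example. In practice you would use an MLlib estimator such as `pyspark.ml.regression.LinearRegression`.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y)


def split(points: Sequence[Point], k: int) -> List[List[Point]]:
    # Round-robin assignment of points to k partitions
    return [list(points[i::k]) for i in range(k)]


def partition_gradient(part: List[Point], w: float, b: float) -> Tuple[float, float, int]:
    """Sum of squared-error gradient terms for one partition, plus its size."""
    gw = gb = 0.0
    for x, y in part:
        err = w * x + b - y
        gw += 2 * err * x
        gb += 2 * err
    return gw, gb, len(part)


def fit_line(points: Sequence[Point], k: int = 3, lr: float = 0.02,
             iters: int = 2000) -> Tuple[float, float]:
    parts = split(points, k)  # in Spark, these partitions would stay cached in memory
    w = b = 0.0
    for _ in range(iters):
        # Each partition computes its gradient independently; the results are then summed
        grads = [partition_gradient(p, w, b) for p in parts]
        n = sum(c for _, _, c in grads)
        w -= lr * sum(g for g, _, _ in grads) / n
        b -= lr * sum(g for _, g, _ in grads) / n
    return w, b


if __name__ == "__main__":
    data = [(float(x), 2.0 * x + 1.0) for x in range(10)]
    print(fit_line(data))
```

Every iteration touches the full dataset, so reading it from disk each time would dominate the run time; keeping the partitions in memory is what makes this loop cheap.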

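Another use for Apache Spark is in graph processing. Graph processing represents data as vertices connected by edges and analyzes those connections. For example, graphs can be used to understand relationships between different pieces of information, such as which users follow each other in a social network. Spark provides the GraphX library for this kind of work.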
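
PageRank is the classic graph example. Spark's bundled RDD-based PageRank example uses a simplified update in which each page's new rank is 0.15 + 0.85 × (sum of the rank contributions from pages linking to it), with every page splitting its current rank evenly among its outgoing links. The plain-Python sketch below applies that same update rule; the `pagerank` function is hypothetical, and for real graphs you would use GraphX (Scala/Java) or, from Python, the separate GraphFrames package.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def pagerank(edges: List[Tuple[str, str]], iterations: int = 50) -> Dict[str, float]:
    """Simplified PageRank: rank = 0.15 + 0.85 * (sum of incoming contributions)."""
    links: Dict[str, List[str]] = defaultdict(list)
    nodes: Set[str] = set()
    for src, dst in set(edges):  # ignore duplicate edges
        links[src].append(dst)
        nodes.update((src, dst))
    ranks = {node: 1.0 for node in nodes}
    for _ in range(iterations):
        contribs: Dict[str, float] = defaultdict(float)
        # Each page splits its current rank evenly among its outgoing links
        for src, dsts in links.items():
            for dst in dsts:
                contribs[dst] += ranks[src] / len(dsts)
        # Pages with no incoming links keep only the 0.15 base rank
        ranks = {node: 0.15 + 0.85 * contribs[node] for node in nodes}
    return ranks


if __name__ == "__main__":
    graph = [("A", "B"), ("B", "A"), ("C", "B")]
    for page, rank in sorted(pagerank(graph).items()):
        print(page, round(rank, 4))
```

In this small graph, page B receives links from both A and C and ends up with the highest rank, while C, which nothing links to, keeps only the 0.15 base rank.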

Conclusion

Apache Spark is a powerful platform that has changed how many organizations process big data. This simple guide will help you get started with Apache Spark and give you the knowledge you need to apply it to your specific needs. If you're looking to take your data processing to the next level, this guide is for you!

That’s a wrap!

Thank you for taking the time to read this article! I hope you found it informative and enjoyable. If you did, please consider sharing it with your friends and followers. Your support helps me continue creating content like this.



Thanks!

Faraz 😊